The coefficients are coef(boston_fit, 12) # (Intercept) zn indus chas nox

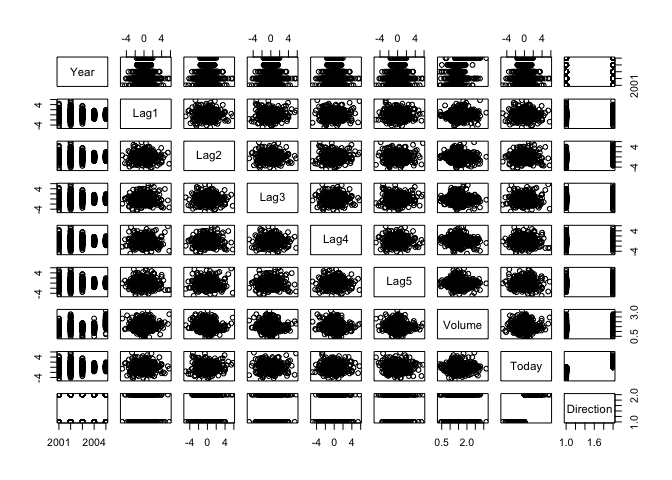

Which.min(cv_boston_mean) #It is a 9 variable model # 12Ĭross validation has selected a 12 variable model. Also, i have created a repository in which have saved all the python solutions for the labs, conceptual exercises, and applied exercises. , data = Boston, nvmax = n_var)īoston_pred = predict(boston_fit, Boston, id = j)Ĭv_boston = mean( (Boston$crim - boston_pred)^2 )Ĭv_boston_mean <- apply(cv_boston, 2, mean) I have been studying from the book 'An Introduction to Statistical Learning with application in R' for the past 4 months. N_var <- 13 #Boston does not have categorical variablesįolds <- sample(1:k, nrow(Boston), replace = TRUE)Ĭv_boston <- matrix(NA, k, n_var, dimnames = list(NULL, paste(1:n_var)))īoston_fit = regsubsets(crim ~. In this exercise we will use the Boston data set from the MASS library and try to predict per capita crime rate. Scale_y_continuous(breaks = seq(4, 20, by = 4))Īs suggested by the authors of Introduction to Statistical Learning, this is very similar to the MSE curve above. We will briey cover the topics of probability and descriptive statistics, followed by detailed descriptions of widely used inferential. Labs(x = "Number of variables", y = "Distance between estimated and true coefficients") + Introduction These notes are intended to provide the student with a conceptual overview of statistical methods with emphasis on applications commonly used in pharmaceutical and epidemiological research. Gareth James Department of Information and.
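The call `predict(boston_fit, Boston, id = j)` used in the cross-validation code needs a `predict` method for `regsubsets` objects, which the leaps package does not provide. A minimal sketch of such a helper, written in the style of the one used in the ISLR lab, might look as follows. The helper body, the choice of `id = 3` and the names `fit` and `pred` are illustrative assumptions, not taken from the text, and the sketch assumes `regsubsets()` was called with a formula as its first argument.

```r
library(leaps)  # regsubsets(); assumed to be installed
library(MASS)   # Boston data set

# Hypothetical helper in the style of the ISLR lab: builds the design matrix
# for newdata and multiplies it by the coefficients of the id-variable model.
predict.regsubsets <- function(object, newdata, id, ...) {
  form  <- as.formula(object$call[[2]])   # formula used in the regsubsets() call
  mat   <- model.matrix(form, newdata)    # design matrix, including the intercept
  coefi <- coef(object, id = id)          # coefficients of the best id-variable model
  drop(mat[, names(coefi), drop = FALSE] %*% coefi)
}

fit  <- regsubsets(crim ~ ., data = Boston, nvmax = 13)
pred <- predict(fit, Boston, id = 3)      # dispatches to predict.regsubsets
mean((Boston$crim - pred)^2)              # training MSE of the 3-variable model
```

Because the helper is named `predict.regsubsets`, R's S3 dispatch picks it up automatically whenever `predict()` is called on a `regsubsets` fit, so the loop body does not need to change.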

We assume we have a response variable \(Y\) and a feature matrix \(X\) of shape \((n, p)\), that is, \(n\) observations (rows) and \(p\) features (columns). This book aims to be a complement to the.

An Introduction to Statistical Learning exercises how to

Solutions to Exercises of Introduction to Statistical Learning, Chapter 6
Guillermo Martinez Dibene, 30th of April, 2021

These pages were put together to maximise my learnings from the book. As it is a learning exercise, there may be errors.

This notebook is about model selection and regularisation for linear regression. R code covering the Introduction to Statistical Learning book: reproducing examples, figures, solving exercises.

CHAPTER 6: AN INTRODUCTION TO CORRELATION AND REGRESSION

CHAPTER 6 GOALS

- Learn about the Pearson Product-Moment Correlation Coefficient (r)
- Learn about the uses and abuses of correlational designs
- Learn the essential elements of simple regression analysis
- Learn how to interpret the results of multiple regression
- Learn how to calculate and interpret Spearman’s r, Point.

An Introduction to Statistical Learning provides an accessible overview of.

and the R exercises can be very useful to the more practically-minded rea A.

In this method, we assume we have a sample \(X_1, \ldots, X_n\) of independent random variables following the same distribution \(F\). We then approximate the variance of \(F\), denoted \(\sigma_F^2\), using the “plug-in” statistic of \(F\), in this case the “empirical distribution function”, which we denote by \(F_n\) (this distribution depends on the given sample). We know the shape of \(F_n\) explicitly (it assigns mass \(\frac{1}{n}\) to each \(X_i\)). When there is no closed form for the standard error, the bootstrap method really is quite handy.
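The plug-in idea can be sketched in a few lines of base R. This is only an illustration under assumed inputs: the simulated sample `x`, the number of resamples `B`, and the helper names `plugin_var` and `boot_se` are hypothetical and do not come from the text. The first helper computes the variance of the empirical distribution \(F_n\); the second resamples from \(F_n\) to estimate the standard error of a statistic, here the median, for which no simple closed form is available.

```r
# Plug-in variance: the variance of the empirical distribution F_n,
# which puts mass 1/n on each observation (note the divisor n, not n - 1).
plugin_var <- function(x) {
  mean((x - mean(x))^2)
}

# Bootstrap standard error of a statistic: drawing n values from x with
# replacement is the same as sampling from F_n; repeat B times.
boot_se <- function(x, statistic, B = 1000) {
  reps <- replicate(B, statistic(sample(x, length(x), replace = TRUE)))
  sd(reps)
}

set.seed(1)                        # assumption: fixed seed for reproducibility
x <- rnorm(200, mean = 5, sd = 2)  # hypothetical sample

plugin_var(x)       # equals var(x) * (n - 1) / n
boot_se(x, median)  # bootstrap estimate of the standard error of the median
```

The plug-in variance differs from R's `var()` only by the factor \((n-1)/n\), which is why the two agree closely for a sample of this size.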
